-

we use AMD-MI308 to run Qwen2.5-VL-32B(vLLM 0.9.2), it turns out that inference result is not we want.

-

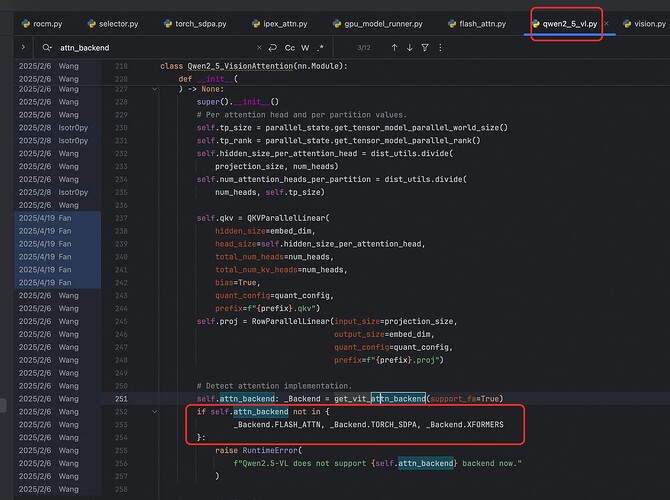

i checkout the source code, finding that qwen2_5_vl Qwen2_5_VisionAttention will use _Backend.TORCH_SDPA, but RocmPlatform doesn’t support _Backend.TORCH_SDPA. Is this the reason why it doesn’t work?If so, what should i do to solve this?

Yes, this is likely the reason for your issue. On AMD ROCm, the Qwen2_5_VisionAttention class in vLLM attempts to use _Backend.TORCH_SDPA, but the ROCm platform does not support this backend for vision attention. Instead, ROCm typically supports FLASH_ATTN (FlashAttention) or falls back to other supported backends, depending on device and software configuration. If the backend is not supported, a RuntimeError is raised, and inference will not work as expected. This is consistent with the logic in both the model and ROCm platform code you referenced (qwen2_5_vl.py, rocm.py).

To resolve this, you should ensure that a supported attention backend is available for vision attention on ROCm. For MI300 series GPUs, you should install and configure ROCm FlashAttention or Triton FlashAttention as described in the official vLLM ROCm installation guide. After installing, verify that the backend is correctly selected (e.g., FLASH_ATTN or TRITON_MLA). If you still encounter issues, you may need to update vLLM, ROCm, or the relevant attention libraries to ensure compatibility with your hardware and model. If only TORCH_SDPA is available and not supported, the model will not run correctly on ROCm.

Would you like more detail on how to install or configure the correct backend for your setup?

Sources:

We have added flash attention support in [FEAT][ROCm] Enable running Flash Attention as ViT attn backend for Q… · vllm-project/vllm@d3a6f21 · GitHub, please try it out!

FlashAttention support for Qwen2.5-VL models on ROCm was added in commit d3a6f2120bb6b67fc58a3f1000d624cfb351eb05. You should now be able to use FlashAttention as the backend for vision attention on AMD GPUs by updating to a vLLM version that includes this commit.

Would you like step-by-step instructions on how to enable and test this feature?

Sources:

sorry,i am a little bit confused now.

-

Now, we use vllm.attention.backends.rocm_flash_attn.ROCmFlashAttentionBackend in model and TORCH_SDPA in vit, is this the reason that cause problems with inference results?

-

the new commit seems to only add _Backend.ROCM_AITER_FA support in qwen2.5_vl vit? i wonder if this could solve our problem?

You are correct: the new commit adds support for the _Backend.ROCM_AITER_FA (AITER FlashAttention) backend in Qwen2.5-VL ViT, but does not add support for ROCmFlashAttentionBackend or fix issues with TORCH_SDPA on ROCm. If your current setup uses ROCmFlashAttentionBackend or TORCH_SDPA for ViT on ROCm, this may cause inference issues, as these backends are not fully supported or tested for Qwen2.5-VL vision attention on ROCm (see discussion).

Switching to the new _Backend.ROCM_AITER_FA backend (after installing AITER as described in the ROCm install guide) should resolve the problem, as this backend is now explicitly supported for Qwen2.5-VL ViT on ROCm. If you continue using TORCH_SDPA or ROCmFlashAttentionBackend, you may still encounter incorrect or unsupported behavior.

Would you like more detail on how to enable the AITER backend for your model?

Sources:

- Yes, flash attention is enabled for ViT which should solve the original error you posted.

- If you have AITER installed,

_Backend.ROCM_AITER_FAshould be selected automatically if you enable AITER viaVLLM_ROCM_USE_AITER=1

Correct. If you have AITER installed and set VLLM_ROCM_USE_AITER=1, the _Backend.ROCM_AITER_FA (AITER FlashAttention) backend will be selected automatically for ViT in Qwen2.5-VL on ROCm, resolving the original backend error (PR #22069). This ensures proper support and correct inference results.

Would you like more detail on verifying AITER installation or backend selection?

Sources:

Maybe i haven‘t describe our problems clearly.

we use vllm 0.9.2. When using a prompt to make the model describe image content, the inference results are completely unrelated to the actual image content.

The issue cannot be reproduced with a single request but stably occurs under concurrent requests.

The problem only manifests on AMD MI 308 hardware and does not occur on NVIDIA GPUs.

So, we think Potential flaws in the ROCmFlashAttention implementation under concurrent workloads.

Then, I found Qwen2_5_VisionAttention will use _Backend.TORCH_SDPA in default settings, I think maybe rocm having some issuses with TORCH_SDPA.

So i set envs.VLLM_ATTENTION_BACKEND=FLASH_ATTEN, Qwen2_5_VisionAttention will use _Backend.FLASH_ATTN, but the inference results still remain completely unrelated to the actual image content.

Your description matches a known issue: on AMD MI300 series with vLLM and Qwen2.5-VL, vision inference results can be incorrect or unrelated to the image content under concurrent requests, but not with single requests or on NVIDIA hardware. This is likely due to flaws or race conditions in the ROCm FlashAttention implementation or its integration with vLLM, especially under concurrency (see related issues, PR #22069).

Switching the backend to FLASH_ATTN or TORCH_SDPA does not resolve the issue, as both may have incomplete or unstable support for ViT on ROCm under concurrent workloads. This problem is not reported on NVIDIA, confirming it is ROCm-specific. There is no confirmed fix in vLLM 0.9.2; the recommended next steps are to (1) ensure you are using the latest ROCm stack and vLLM, (2) try enabling the AITER backend (VLLM_ROCM_USE_AITER=1), and (3) if the issue persists, report detailed logs to the vLLM GitHub for further investigation.

Would you like more detail on how to collect logs or test with AITER?

Sources: