Question: Why does computation time remain consistent across chunks in chunked-prefill despite linearly growing attention complexity?

Context:

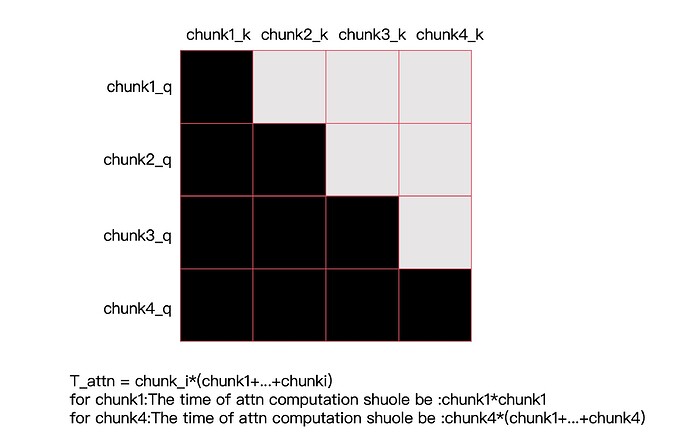

I’m profiling vLLM’s chunked-prefill optimization for long sequences (e.g., 8k tokens split into 4×2k chunks). Profiling shows near-identical computation time per chunk, but theoretically, attention complexity for later chunks (e.g., chunk 3) should grow linearly as they attend to all preceding KV history (chunks 0→3).

Below is my profiler screenshot for a 12k-token sequence processed via chunked-prefill with 6 chunks of 2048 tokens each

Key Observations:

-

Uniform chunk latency:

- Chunks 0→3 each take ~X ms (profiler data), despite chunk 3 requiring attention over 8k tokens.

- This contradicts the expected $O(n^2)$ attention cost growth for later chunks.

-

KV Cache behavior confirmed:

- Prefix caching is disabled; each chunk computes full attention over all prior KV.

- PagedAttention ensures global attention coverage (chunk 3 attends to chunks 0→3).

Suspected Optimizations:

I hypothesize vLLM mitigates latency growth via:

- Hardware-level parallelism: GPU tensor cores optimize large matrix multiplications ($Q \times K^T$), masking compute growth.

- Memory access optimization: PagedAttention’s contiguous block loading minimizes HBM latency for historical KV fetches.

- Compute-I/O overlap: Async prefetching of historical KV blocks during current chunk computation.

Core Question:

How does vLLM achieve constant-time chunk computation while maintaining mathematical equivalence to full-sequence attention? Specifically:

- Does vLLM implicitly limit attention scope (e.g., windowed attention)? [My tests confirm full attention.]

- Are there kernel-level optimizations (e.g., fused ops) that amortize linear complexity growth?

- Is the consistency an artifact of profiler measurement (e.g., batched execution masking latency)?